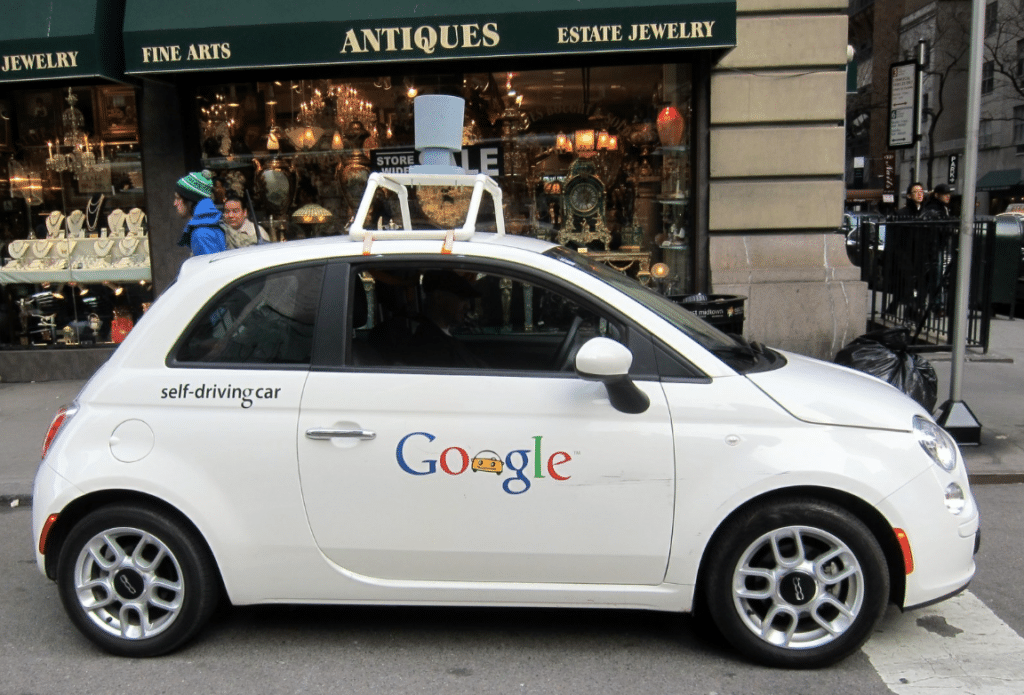

Much has been written about how politicians, insurance companies, and lawyers will be among the many obstacles to bringing self-driving cars quickly to the mass market. However, I recently read an article that discusses certain moral issues that must also be resolved first.

The article, entitled “Why Self-Driving Cars Must Be Programmed To Kill”, discusses how such cars should be ethically programmed. Specifically, should the software be programmed to minimize loss of life, even if that means sacrificing the driver and occupants, or should it protect the occupants at all costs?

The article posits: “Imagine that in the not-too-distant future, you own a self-driving car. One day, while you are driving along, an unfortunate set of events causes the car to head toward a crowd of 10 people crossing the road. It cannot stop in time but it can avoid killing 10 people by steering into a wall. However, this collision would kill you, the owner and occupant. What should it do?”

Of course, many people will argue in favor of a utilitarian approach that minimizes loss of life (i.e., the car should kill as few people as possible). But would you buy a car that is programmed to kill you rather than others?

Another moral dilemma involves motorcyclists. Should a self-driving car be programmed to hit a wall rather than a motorcyclist, knowing that the risk of injury is far greater for the motorcyclist than for the occupants of the car?

While the technology is impressive and will reduce accidents, it clearly raises serious ethical issues (among other obstacles) that may take years to work out.